Link to the Viral Texts Project: https://viraltexts.org/

The Viral Texts Project is an online repository for cross-disciplinary research on 19th-century newspaper publishing. The purpose of the project is to develop a resource that helps scholars understand how and why certain texts proliferated throughout the population in the 19th century. The development of this project is guided by an interest in the material history of textual production, one that highlights the role of copying, exchange, and networks of circulation, to help scholars answer the question, “how did newspaper ideas disseminate through networks, and become ‘viral’?” This “bottom-up bibliography” approach (Smith et al. use this language in Chapter 4 of their book proposal for Going the Rounds) privileges “texts” over “works,” focusing on content that typically goes unnoticed by literary scholars and historians, such as love letters, vignettes, and how-to articles.

The research is presented through data, data visualizations, two interactive exhibits, several scholarly articles, and an (unfinished) Manifold publication titled Going the Rounds: Virality in Nineteenth-Century American Newspapers. The project is a collaboration among computer scientist David Smith, Assistant Professor of English Ryan Cordell, and two graduate students (one in history and another in English). The project is supported financially by Northeastern’s NULab and the NEH.

The interdisciplinary nature of the Viral Texts Project lends itself to some interesting results; however, the open-ended nature of the project exacerbates existing issues with the interoperability of the technology it uses. The website itself also has interface issues. These problems highlight the challenges facing scholars who wish to develop collaborative digital projects.

The central achievement of the Viral Texts Project is David Smith’s development of a “reprint-detection algorithm” that combs the Library of Congress’s Chronicling America newspaper archive and the Making of America magazine archives and collects the most reprinted articles. This restructuring of data is valuable to scholars because it gathers in one place information spread across different databases. Popular articles reprinted during the antebellum era were usually amended slightly or given different titles, making it difficult for scholars to find duplicates in the archives (a difficulty compounded by the fact that indexes often differ from database to database). Smith’s algorithm accounts for these problems by using computational linguistics to detect duplicated wording that indicates a reprint. The data culled from this work is shared openly on a GitHub page linked to the project’s website; unfortunately, the page is difficult to navigate, is not easily searchable, and requires digging through different .csv files to locate datasets. Although a scholar with technical knowledge may be able to find what they’re looking for, easily downloadable datasets are seemingly not available.
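To make the idea of detecting duplicated wording concrete, here is a minimal sketch of one common approach: comparing overlapping word sequences (n-grams) between texts. It is an illustration under stated assumptions, not the project's actual algorithm. The function names, the n-gram length, the threshold, and the sample texts are all invented for this example.

```python
import re
from itertools import combinations


def shingles(text, n=4):
    """Return the set of word n-grams in a text after light normalization."""
    # Lowercasing and dropping punctuation lets small edits go unnoticed.
    words = re.findall(r"[a-z]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap(a, b, n=4):
    """Share of the shorter text's n-grams that also appear in the other text."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / min(len(sa), len(sb))


def candidate_reprints(docs, n=4, threshold=0.3):
    """Return (id_a, id_b, score) for pairs whose overlap meets the threshold."""
    pairs = []
    for (id_a, text_a), (id_b, text_b) in combinations(sorted(docs.items()), 2):
        score = overlap(text_a, text_b, n)
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 2)))
    return pairs


# Hypothetical sample texts (not taken from the archives): the first two
# differ in title and word order, the third is unrelated.
docs = {
    "paper_a": "A Fine Letter. My dear, the moon was bright and the night "
               "was calm when I first saw you.",
    "paper_b": "Letter. My dear, the moon was bright and the night was calm "
               "when first I saw you!",
    "paper_c": "How to keep apples through the winter: pack them in dry sand "
               "in a cool cellar.",
}

for id_a, id_b, score in candidate_reprints(docs):
    print(id_a, id_b, score)
```

Comparing every pair of texts works for a handful of documents but would not scale to a full newspaper archive; a real system would need indexing and some tolerance for OCR errors, neither of which is attempted here.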

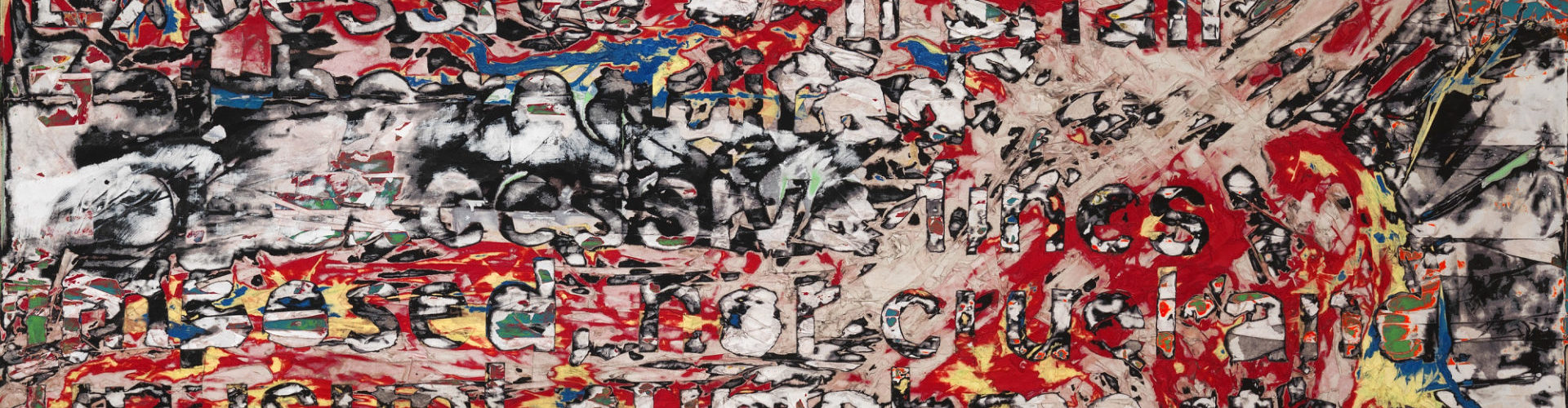

On the site, scholars also have access to a wide range of “network graphs” that visualize the data collected by Viral Texts researchers. These graphs visually represent the linkages between newspapers. The project also provides graphs demonstrating the path of particular texts over time. Although one can drill down into the data a bit, one can’t quickly pull up the most widely shared articles. Few annotations or editorial notes accompany the data, and the annotations that do exist point to internal language. This data alone is of tremendous use to scholars and computer scientists interested in mapping the passage of texts, but for it to be of use to non-scholars, additional contextual annotations would help.
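As a rough illustration of how linkages between newspapers could be derived from reprint data, the sketch below counts how often two newspapers appear in the same reprint cluster and treats that count as the weight of the edge between them. This is an assumption about how such a graph might be built, not a description of the project's own pipeline; the cluster structure, the function name, and the newspaper names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def newspaper_edges(clusters):
    """Count, for each pair of newspapers, the clusters they share."""
    edges = Counter()
    for papers in clusters.values():
        # A paper that printed the same text twice still counts once.
        for a, b in combinations(sorted(set(papers)), 2):
            edges[(a, b)] += 1
    return edges


# Hypothetical clusters: each maps a reprinted text to the papers that ran it.
clusters = {
    "cluster_1": ["Paper A", "Paper B", "Paper C"],
    "cluster_2": ["Paper A", "Paper B"],
    "cluster_3": ["Paper B", "Paper C", "Paper B"],
}

edges = newspaper_edges(clusters)
for (a, b), weight in sorted(edges.items()):
    print(a, "<->", b, "shared reprints:", weight)
```

The resulting weighted pairs could be handed to any graph-drawing tool; heavier edges would indicate newspapers that more often carried the same texts.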

The interactive exhibit portion of the site allows users to browse a composite “front page” made up of the most commonly reprinted texts. The “Love letter exhibit” is a facsimile of a commonly reprinted poem, “A ‘stunning’ love letter.” Readers can click on lines of the text to bring up annotations revealing that the lines are themselves reprints or near copies of other commonly reprinted articles. The project’s researchers write that these annotations provide a “portal into our larger dataset.” This exhibit is a fascinating look into the composite nature of texts produced in the 19th century and is a potentially valuable teaching tool, encouraging students to think about texts produced within networks.

The Viral Texts Project is a good example of how digital publishing “expands the toolset” of scholars (Fitzpatrick, 84). Rather than presenting a simple database containing facsimiles of antebellum texts, researchers at the Viral Texts Project are expanding the notion of what a “text” is through computational tools. Literary scholars have much to gain by exploring the material context of textual production, the quantity of reprints, and the path of texts through networks. In Going the Rounds, Ryan Cordell and his co-authors explain how their research demonstrates the “industrialization of knowledge” during the 19th century. In this way, the project is a good example of what Bolter and Grusin call “remediation,” the way in which a new technological medium improves on or remedies the failure of an earlier one (Bolter & Grusin, 59). The material history of the text, previously hidden, is demonstrated through data visualization, so that a “fuller” picture of the text emerges. There is a wonderfully materialist aspect to this, as a text is also an object (the “medium is the message”). This is a valuable way of teaching texts as well. Access to data that shows the path of a text or meme allows scholars to teach texts alongside easily visualizable bibliographic information.

Viral Texts demonstrates the value of computational tools for getting the most out of existing digital archives and for reconceptualizing the role of the literary scholar to include quantitative analysis, data restructuring, and modeling. The difficulty lies in developing a project that is bounded rather than endlessly open-ended. No aspect of the Viral Texts Project is tethered to the codex form of organization, and the website itself is not fashioned in a way that creates a legible narrative or an easy-to-follow structure. This is not a failure so much as a testament to the challenge of working collaboratively on digital projects.